Let’s be honest: innovation in highly regulated industries usually feels like driving a Ferrari with the handbrake on.

For the last decade, the financial sector has been stuck in “pilot purgatory.” We’ve all seen the press releases. Banks love to announce they are experimenting with AI. But when it comes to actually deploying these models into core operations—where they touch real money and real risk—the momentum usually dies.

Why? Because the cost of getting it wrong is too high.

A new development from digital banking platform Plumery is attempting to release that handbrake. The company calls it “AI Fabric,” and it addresses the single biggest friction point for founders and CTOs in fintech: how do you move fast without breaking the rules?

The “Data Spaghetti” Problem

To understand the solution, we have to respect the problem. Most established financial institutions are sitting on a goldmine of data, but it’s trapped in fragmented, legacy silos. Industry insiders call it a “data estate,” but in reality, it often looks more like a plate of spaghetti.

Every time a bank wants to integrate a new generative AI tool, it has to build a custom bridge to that data. This triggers endless security reviews, governance approvals, and integration headaches. It is slow, expensive, and risky.

As Ben Goldin, Plumery’s founder, pointed out, institutions want production use cases that actually improve operations. They don’t want “another AI layer on top of fragmented systems.” They need the plumbing to be fixed.

Enter the “Fabric” Approach

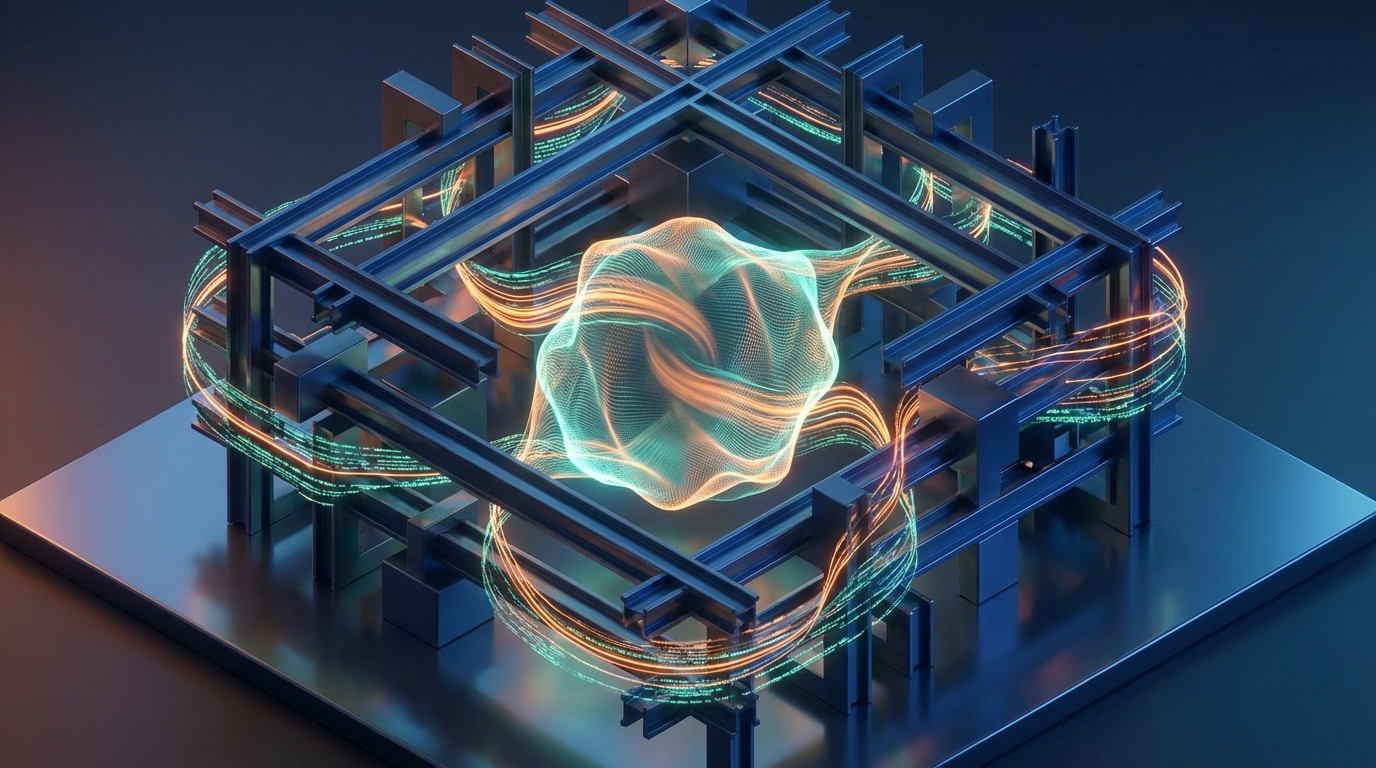

Plumery’s solution isn’t just another chatbot; it’s an architectural shift. Instead of bespoke integrations, AI Fabric acts as a standardized framework.

Think of it as a universal adapter. It uses an API-first, event-driven architecture to connect AI models directly to core banking data, but—and this is the crucial part—it does so with governance baked in.

Here is why this matters for decision-makers:

- Decoupling: It separates your systems of record (the old, reliable ledgers) from your systems of intelligence (the new, fast AI). You can innovate on one side without destabilizing the other.

- Reusability: Once the data is in this “fabric,” it becomes a reusable stream. You don’t need to build a new pipeline for fraud detection, then another for customer service, and another for credit scoring. You build it once, govern it, and reuse it.

- Auditability: In banking, if you can’t explain why an AI made a decision, you can’t use it. This framework is designed to make data streams traceable, so AI-driven decisions can stand up to the heavy gaze of regulators.
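
To make those three ideas concrete, here is a minimal Python sketch of the pattern. It is not Plumery’s API: the `Fabric` class, the `payment.created` topic, the consumer names, and the field names are all hypothetical, chosen only to illustrate how one event stream can be published once, filtered per consumer, and logged for audit.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple


@dataclass(frozen=True)
class Event:
    """A change emitted by the system of record (hypothetical shape)."""
    topic: str
    payload: dict


@dataclass
class Fabric:
    """Toy in-memory event bus with per-consumer field policies and an audit log."""
    _subscribers: Dict[str, List[Tuple[str, Set[str], Callable[[dict], None]]]] = field(
        default_factory=dict
    )
    audit_log: List[dict] = field(default_factory=list)

    def subscribe(self, topic: str, consumer: str, allowed_fields: Set[str],
                  handler: Callable[[dict], None]) -> None:
        # Reusability: any number of AI consumers can attach to the same topic.
        self._subscribers.setdefault(topic, []).append(
            (consumer, set(allowed_fields), handler)
        )

    def publish(self, event: Event) -> None:
        # Decoupling: the publisher never calls a model directly.
        for consumer, allowed, handler in self._subscribers.get(event.topic, []):
            # Governance: each consumer only sees the fields it was approved for.
            view = {k: v for k, v in event.payload.items() if k in allowed}
            # Auditability: record who received which fields from which topic.
            self.audit_log.append(
                {"topic": event.topic, "consumer": consumer, "fields": sorted(view)}
            )
            handler(view)


fabric = Fabric()
fraud_seen: List[dict] = []
support_seen: List[dict] = []

# Two hypothetical consumers share one stream but get different views of it.
fabric.subscribe("payment.created", "fraud-model", {"account_id", "amount"}, fraud_seen.append)
fabric.subscribe("payment.created", "support-assistant", {"amount"}, support_seen.append)

fabric.publish(Event("payment.created", {
    "account_id": "A-1",
    "amount": 250,
    "customer_name": "Jane Doe",  # never reaches either consumer
}))

print(fraud_seen)
print(support_seen)
print(fabric.audit_log)
```

A production system would swap the in-memory list for a durable event broker and a real policy engine, but the design choice is the same: the ledger publishes once, and every new AI use case becomes a subscription with its own approved view rather than a new pipeline.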

The Shift from Experiment to Engine

We are already seeing giants like Citibank and Santander use AI for “safe” tasks like customer service chatbots or background fraud detection. But the real ROI lies deeper, in predictive analytics for loan defaults or dynamic credit scoring.

However, getting there requires a shift in mindset. We are moving away from the era of “move fast and break things” toward “move fast with better brakes.”

Platforms that focus on the orchestration layer—handling how data moves and is governed—are becoming the new standard. It’s no longer about who has the smartest AI model; it’s about who has the infrastructure to run that model safely at scale.

The Bottom Line

For business leaders, the takeaway is clear: Governance is no longer the enemy of speed; it is the prerequisite for it.

Tools like AI Fabric suggest that the next wave of fintech won’t be defined by flashy features, but by robust architectures that allow banks to finally trust their own data. The institutions that solve this operational puzzle are the ones that will drive that Ferrari off the lot.