For most business leaders and founders, the frustration is familiar: you invest in an AI pilot, it looks promising in the boardroom, and then it dies in “Proof-of-Concept Purgatory.” It never quite bridges the gap to becoming a daily operational asset.

The UK government has just announced a move that could serve as a blueprint for breaking that cycle.

In a partnership with Anthropic announced today, the Department for Science, Innovation and Technology (DSIT) isn’t just buying another chatbot. It is deploying agentic AI to modernize how citizens interact with the state. For private sector leaders watching from the sidelines, this isn’t just public sector news; it is a case study in digital maturity.

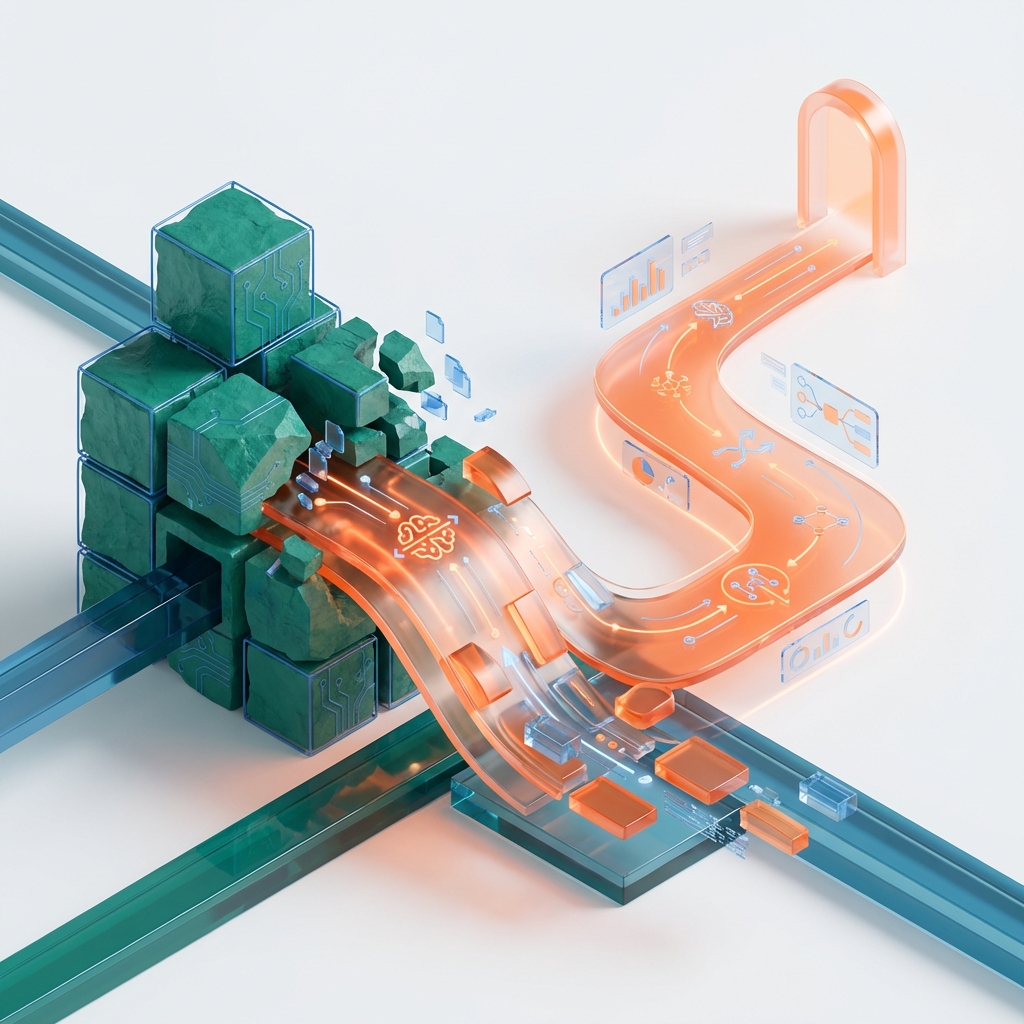

From Retrieval to Action

The biggest friction point in digital services, whether you are selling software or processing unemployment claims, is the gap between information and action.

Standard chatbots are great at retrieving data. They can tell a user where to find a form. But they fail when the user lacks the specific domain knowledge to understand which form they actually need. This creates cognitive load, leading to drop-offs and support tickets.

The UK’s initiative prioritizes “agentic” systems powered by Anthropic’s Claude. Unlike a passive bot, an agent is designed to actively guide users through complex workflows. It doesn’t just return a list of links; it walks the user through the process step by step.

The Power of “Stateful” Interactions

Why start with employment services? Because job seeking is a journey, not a one-off transaction. It requires context.

The new system creates a “stateful” interaction. It remembers previous inputs, allowing a user to pause and resume without starting from zero. In user experience terms, this respects the user’s time and significantly lowers the barrier to completion.
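The announcement does not describe how the UK system actually stores state. As a minimal illustration of what “stateful” means in practice, the Python sketch below keeps a per-user record of which workflow steps have been answered, saves it when the user pauses, and reloads it so the next question picks up where they left off. The step names, `Session`, and `SessionStore` are all hypothetical, and a JSON string stands in for whatever persistent database a real deployment would use.

```python
import json
from dataclasses import dataclass, field

# Hypothetical steps in an employment-services workflow (illustrative only).
STEPS = ["skills", "preferred_location", "availability"]


@dataclass
class Session:
    user_id: str
    answers: dict = field(default_factory=dict)

    def next_step(self):
        """Return the first unanswered step, or None once the workflow is complete."""
        for step in STEPS:
            if step not in self.answers:
                return step
        return None

    def record(self, step, value):
        """Store the user's answer so it survives a pause."""
        if step not in STEPS:
            raise ValueError(f"unknown step: {step}")
        self.answers[step] = value


class SessionStore:
    """Holds sessions keyed by user ID; JSON serialization stands in for a database."""

    def __init__(self, data=None):
        self._sessions = {}
        if data:
            for user_id, answers in json.loads(data).items():
                self._sessions[user_id] = Session(user_id, dict(answers))

    def get(self, user_id):
        # Resume an existing session, or start a fresh one for a new user.
        if user_id not in self._sessions:
            self._sessions[user_id] = Session(user_id)
        return self._sessions[user_id]

    def dump(self):
        # Persist only what is needed to resume: each user's answers so far.
        return json.dumps({uid: s.answers for uid, s in self._sessions.items()})


# Usage: the user answers one question, pauses, and later resumes.
store = SessionStore()
session = store.get("user-123")
session.record("skills", "forklift licence, customer service")
saved = store.dump()  # state is saved when the user leaves

resumed = SessionStore(saved).get("user-123")  # e.g. a new visit, a new process
print(resumed.next_step())  # preferred_location
```

The design choice that matters here is that progress lives outside the conversation itself: because the store, not the chat window, remembers what has been answered, the user can return days later without starting from zero.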

For founders, the lesson is clear: if your AI doesn’t remember the context of the conversation, you aren’t building a relationship; you’re just building a search bar.

Solving the Vendor Lock-In Trap

Perhaps the most strategic component of this deal is how the build is structured. It is not a traditional outsourced black box.

Anthropic engineers are set to work alongside civil servants. The explicit goal is knowledge transfer. The government is treating AI competence as a core operational asset to be owned, not a commodity to be rented.

This approach directly addresses the risk of vendor lock-in. By building internal expertise during deployment, the organization positions itself to maintain and evolve the system long after the vendor’s engineers have moved on.

The “Scan, Pilot, Scale” Framework

Rolling out Generative AI in a high-stakes environment requires a risk-averse strategy. The project is using a “Scan, Pilot, Scale” framework.

This iterative methodology forces validation of safety protocols before wider release. For business owners, this is the disciplined alternative to “move fast and break things.” When user trust and data sovereignty are on the line, the only way to move fast is to move deliberately.

The Bottom Line: Successful AI integration is becoming less about which model you choose and more about the governance, data architecture, and internal capability you build around it.