Generative AI’s “honeymoon phase” is officially over. For the last two years, we watched businesses scramble to deploy chatbots that could answer questions but rarely moved the needle on revenue. Leaders were left managing high expectations with tools that felt more like novelties than operational assets.

That narrative has flipped.

New data from more than 20,000 organizations suggests that the market has turned a critical corner. We are no longer just asking AI to retrieve information; we are building agentic systems that independently plan, execute, and finish the job. This isn’t a subtle drift. It’s a fundamental restructuring of how digital business gets done.

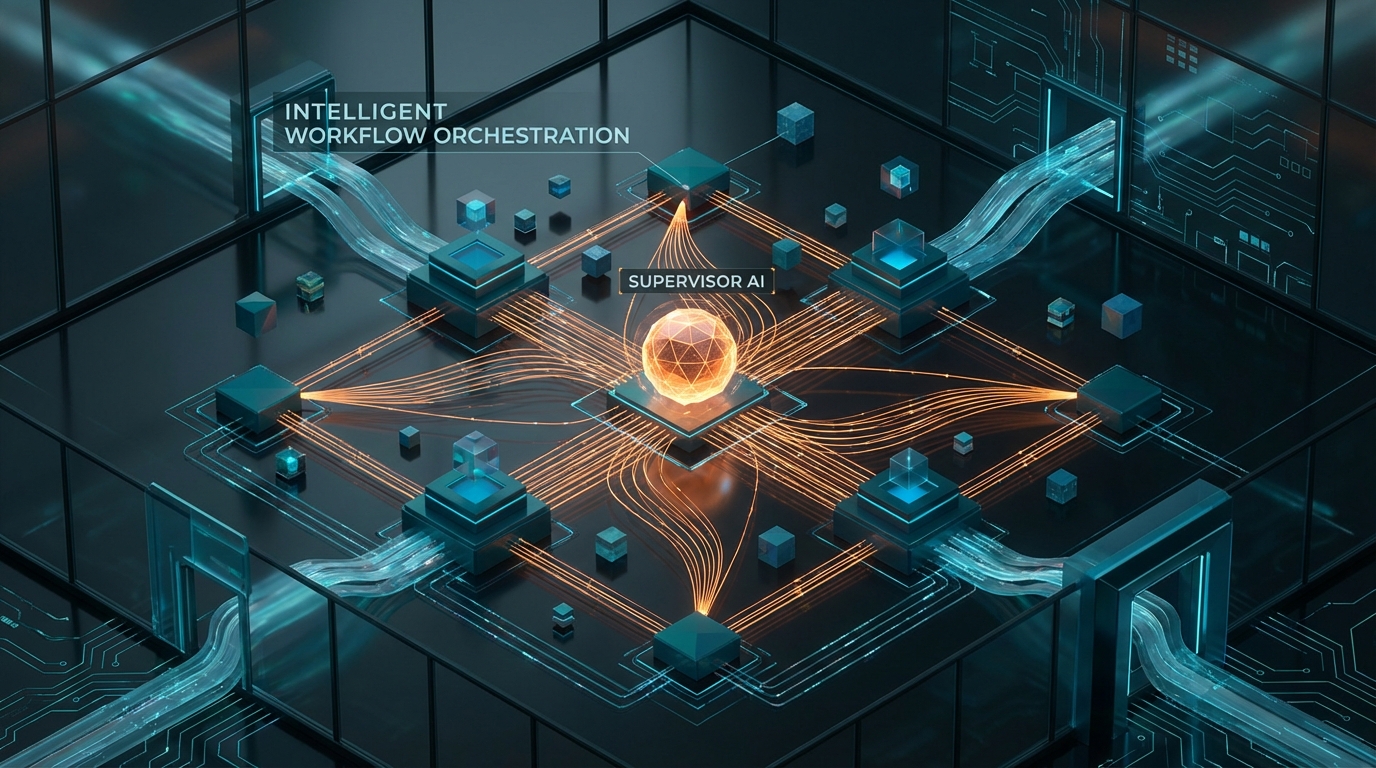

The Rise of the “Supervisor Agent”

The most fascinating development isn’t faster chips or bigger models. It’s a shift in organizational behavior within the software itself: between June and October 2025, use of multi-agent workflows surged by 327%.

Driving this explosion is a concept called the Supervisor Agent.

Think of the early AI implementations as eager interns: they tried to do everything themselves and often got confused. The Supervisor Agent acts like a seasoned manager. It doesn’t perform every task. Instead, it acts as an orchestrator—breaking down complex objectives, detecting intent, and delegating specific sub-tasks to specialized tools.

Since its launch, this “managerial” model has captured 37% of all agent usage. It mirrors successful human hierarchies: verified delegation yields better results than solitary effort.
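To make the manager analogy concrete, here is a minimal sketch of the supervisor pattern in Python. Everything in it is an assumption for illustration: the specialist functions, the keyword-based `detect_intent`, and the naive `split_objective` planner are hypothetical stand-ins for what would be model calls in a real system, not any vendor's actual API.

```python
from typing import Callable, Dict, List


# Hypothetical specialist tools; in practice each would wrap a model or an API.
def lookup_order(task: str) -> str:
    return f"[orders] handled: {task}"


def issue_refund(task: str) -> str:
    return f"[billing] handled: {task}"


def answer_general(task: str) -> str:
    return f"[general] handled: {task}"


# Maps an intent keyword to the specialist that owns it (illustrative only).
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "order": lookup_order,
    "refund": issue_refund,
}


def split_objective(objective: str) -> List[str]:
    """Naive planner: treat each ' and '-separated clause as one sub-task."""
    return [part.strip() for part in objective.split(" and ") if part.strip()]


def detect_intent(task: str) -> str:
    """Keyword matching as a stand-in for model-based intent detection."""
    lowered = task.lower()
    for keyword in SPECIALISTS:
        if keyword in lowered:
            return keyword
    return "general"


def supervise(objective: str) -> List[str]:
    """Break the objective down, then delegate each sub-task to a specialist."""
    results = []
    for task in split_objective(objective):
        handler = SPECIALISTS.get(detect_intent(task), answer_general)
        results.append(handler(task))
    return results


if __name__ == "__main__":
    for line in supervise("find my order and process a refund"):
        print(line)
```

The supervisor never does the work itself. It only plans and routes, which is what keeps each specialist small and easy to test.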

AI is Now Building AI

If you want to understand the scale of automation, look at the infrastructure. The underlying “plumbing” of the internet—databases and testing environments—is no longer being built solely by human hands.

- Two years ago: AI agents created just 0.1% of databases.

- Today: AI agents create 80% of them.

Furthermore, 97% of testing environments are now spun up by agents. This allows developers to create and destroy ephemeral testing grounds in seconds rather than hours, radically accelerating the “idea-to-deployment” loop.
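What does an “ephemeral testing ground” look like in practice? The sketch below is one hedged illustration using only Python's standard library: a throwaway SQLite database that exists for the length of a `with` block and is deleted afterwards. The `ephemeral_database` helper and the sample schema are hypothetical; real agent-provisioned environments would sit on a managed platform rather than a local temp directory.

```python
import sqlite3
import tempfile
from contextlib import contextmanager
from pathlib import Path
from typing import Iterator


@contextmanager
def ephemeral_database(schema: str) -> Iterator[sqlite3.Connection]:
    """Create a throwaway SQLite database, yield it, then delete it."""
    with tempfile.TemporaryDirectory() as workdir:
        conn = sqlite3.connect(Path(workdir) / "test.db")
        try:
            conn.executescript(schema)  # set up the tables for this test run
            yield conn
        finally:
            conn.close()  # close before the temp directory is removed


if __name__ == "__main__":
    schema = "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);"
    with ephemeral_database(schema) as db:
        db.execute("INSERT INTO users (name) VALUES (?)", ("test-user",))
        print(db.execute("SELECT COUNT(*) FROM users").fetchone()[0])
    # At this point the database file no longer exists.
```

Because setup and teardown live in one place, an agent (or a developer) can spin up a clean environment per test without worrying about leftovers.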

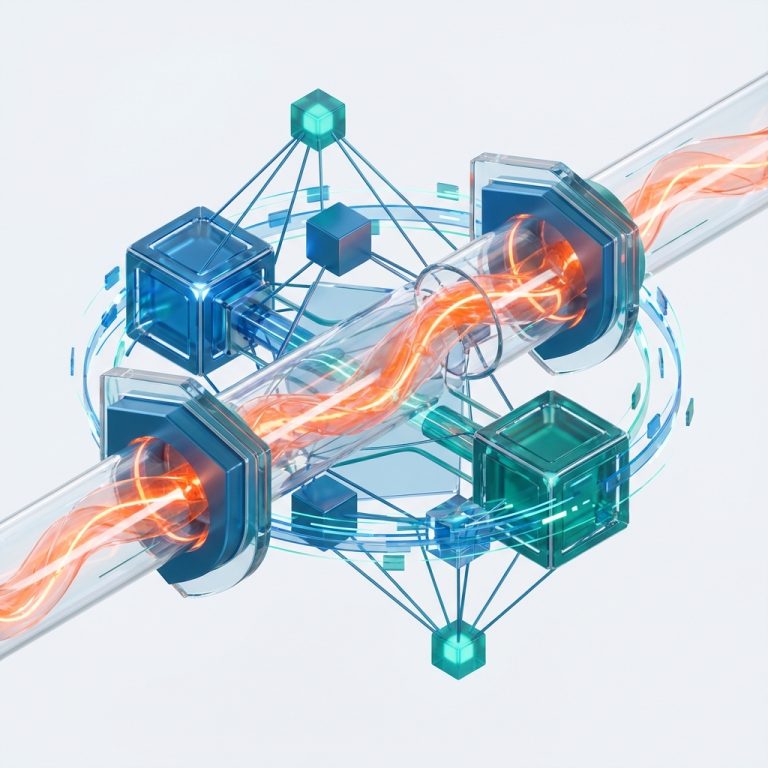

The Multi-Model Safety Net

Business leaders have a deep-seated fear of vendor lock-in, and rightfully so. The data shows a strategic pivot to mitigate this risk. Companies are no longer betting the farm on a single Large Language Model (LLM).

As of late 2025, 78% of organizations are running multi-model strategies, using two or more model families (for example, combining OpenAI’s GPT models with Claude or Llama). This diversity allows engineering teams to route simple, low-value tasks to cheaper models while reserving the expensive, “heavy lifter” models for complex reasoning. It’s a sophisticated balancing act between performance and cost.
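The routing idea can be sketched in a few lines of Python. Treat this as a toy under stated assumptions: the tier names, the prices, the `REASONING_HINTS` keyword list, and the scoring rule are all hypothetical. Production routers typically use a classifier or a small model to judge complexity rather than counting keywords.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                   # hypothetical label, not a real model ID
    cost_per_1k_tokens: float   # illustrative price, not a real quote


CHEAP = ModelTier("small-model", 0.0005)
PREMIUM = ModelTier("large-model", 0.03)

# Words that hint a prompt needs multi-step reasoning (assumed, not exhaustive).
REASONING_HINTS = ("why", "analyze", "compare", "plan", "prove")


def estimate_complexity(prompt: str) -> int:
    """Crude score: one point per 50 words plus one per reasoning keyword."""
    words = prompt.lower().split()
    score = len(words) // 50
    score += sum(1 for word in words if word.strip("?,.!") in REASONING_HINTS)
    return score


def route(prompt: str, threshold: int = 1) -> ModelTier:
    """Send prompts at or above the threshold to the premium tier."""
    return PREMIUM if estimate_complexity(prompt) >= threshold else CHEAP


if __name__ == "__main__":
    for prompt in ("Translate 'hello' to French",
                   "Compare these two contracts and plan a negotiation"):
        print(prompt, "->", route(prompt).name)
```

Raising `threshold` pushes more traffic to the cheap tier, so it becomes the single dial for trading quality against spend.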

The Governance Paradox

Here is the most counter-intuitive finding for the C-suite: Rules make you faster.

Governance is often viewed as the enemy of speed: red tape that slows down innovation. The data points the other way. Organizations that implemented rigorous AI governance and evaluation tools put 12 times more projects into production than those that didn’t.

Why? Because certainty eliminates hesitation. When you have guardrails defining data use and rate limits, stakeholders have the confidence to say “yes.” Without them, pilots die in “Proof of Concept” purgatory, stalled by unquantified risks.
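As a rough illustration of what such guardrails can look like in code, the sketch below combines the two controls named above: an allow-list for data categories and a sliding-window rate limit. The `Guardrail` class, its parameters, and the category names are hypothetical, and a real governance layer would add logging, auditing, and per-user policies.

```python
import time
from collections import deque
from typing import Callable, Deque, Set


class Guardrail:
    """Approve a request only if its data category is allowed and the
    caller is still under the rate limit for the current time window."""

    def __init__(self, allowed_data: Set[str], max_calls: int,
                 window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.allowed_data = allowed_data
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limit can be tested without sleeping
        self._calls: Deque[float] = deque()

    def check(self, data_category: str) -> bool:
        # Data-use rule: reject categories that are not explicitly allowed.
        if data_category not in self.allowed_data:
            return False
        # Rate-limit rule: forget calls that have aged out of the window.
        now = self.clock()
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            return False
        self._calls.append(now)
        return True


if __name__ == "__main__":
    guard = Guardrail({"public", "internal"}, max_calls=2, window_seconds=60)
    for category in ("public", "pii", "internal", "public"):
        print(category, guard.check(category))
```

The point is less the mechanics than the effect: once the rules are explicit and testable, a risk reviewer can read them, and a “yes” stops depending on guesswork.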

The Bottom Line

The conversation has moved from “magic” to engineering rigor. The businesses seeing real returns aren’t the ones waiting for a smarter chatbot; they are the ones building open, interoperable platforms where AI agents run the routine, mundane, and critical parts of their infrastructure.

The pilot phase is finished. The operational reality is here.